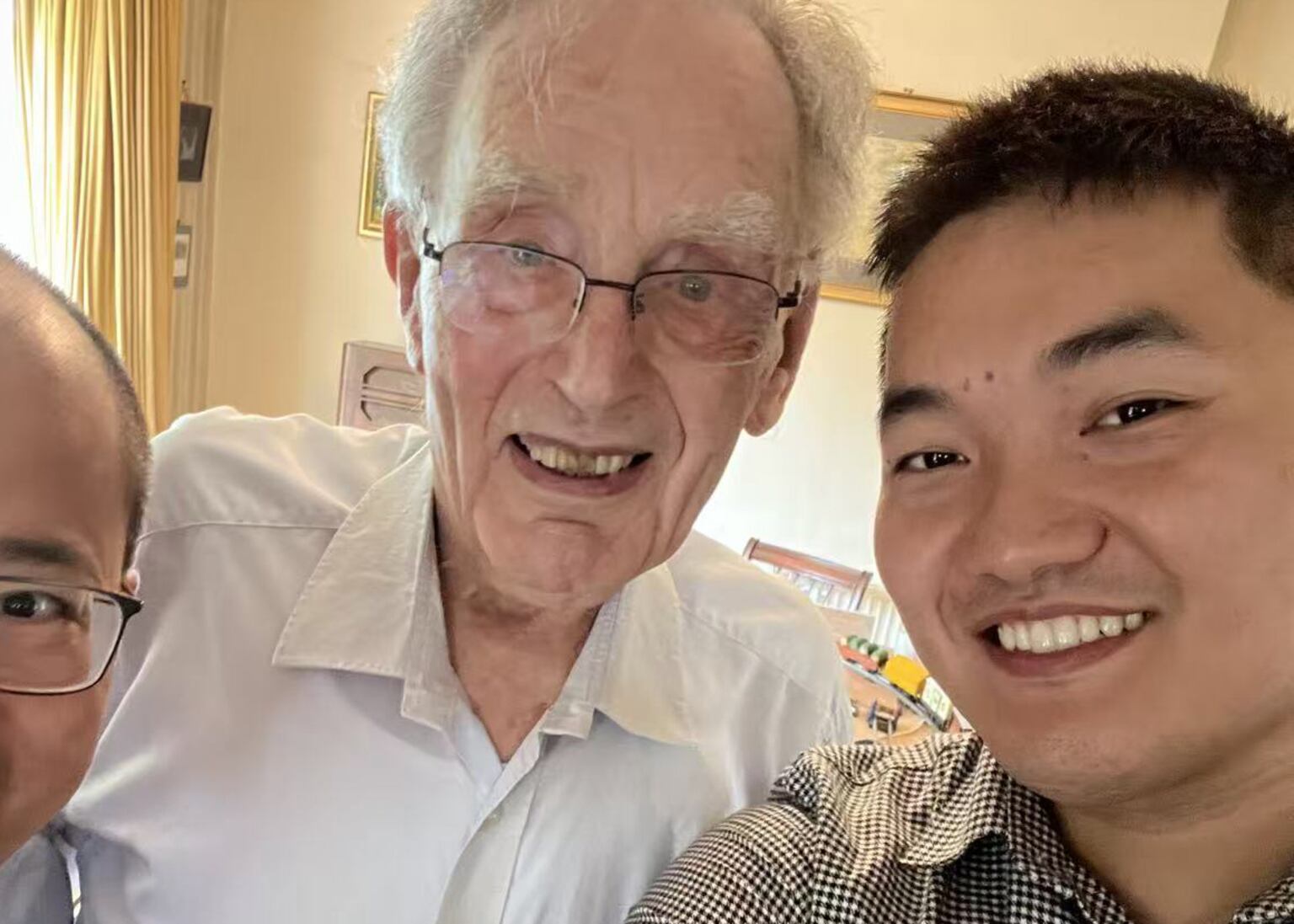

When a kind-hearted engineer noticed his 89-year-old mentor was unsteady on his feet, he sprang into action and created a futuristic shoe which could one day help him – and scores of other older people – keep their balance.

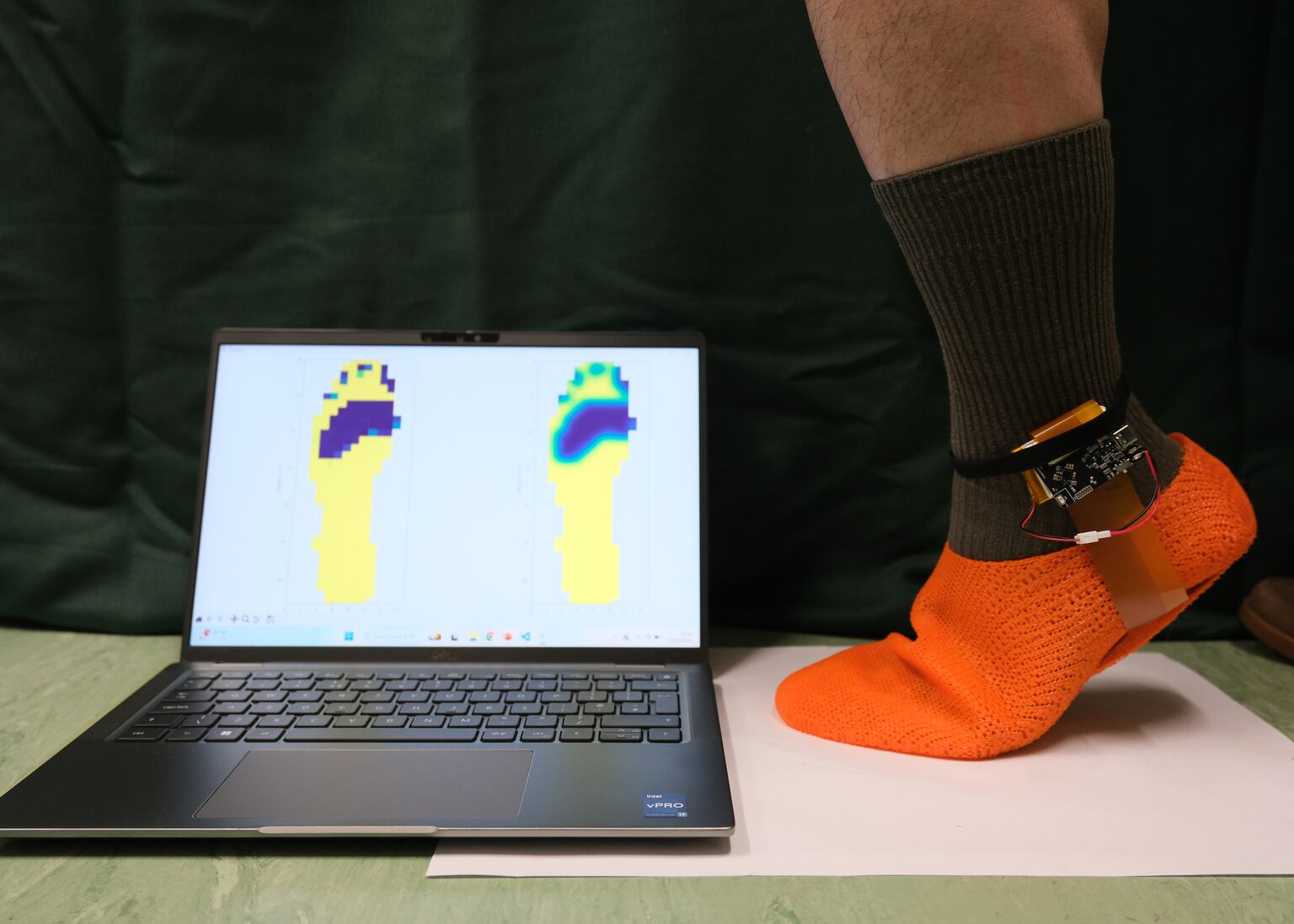

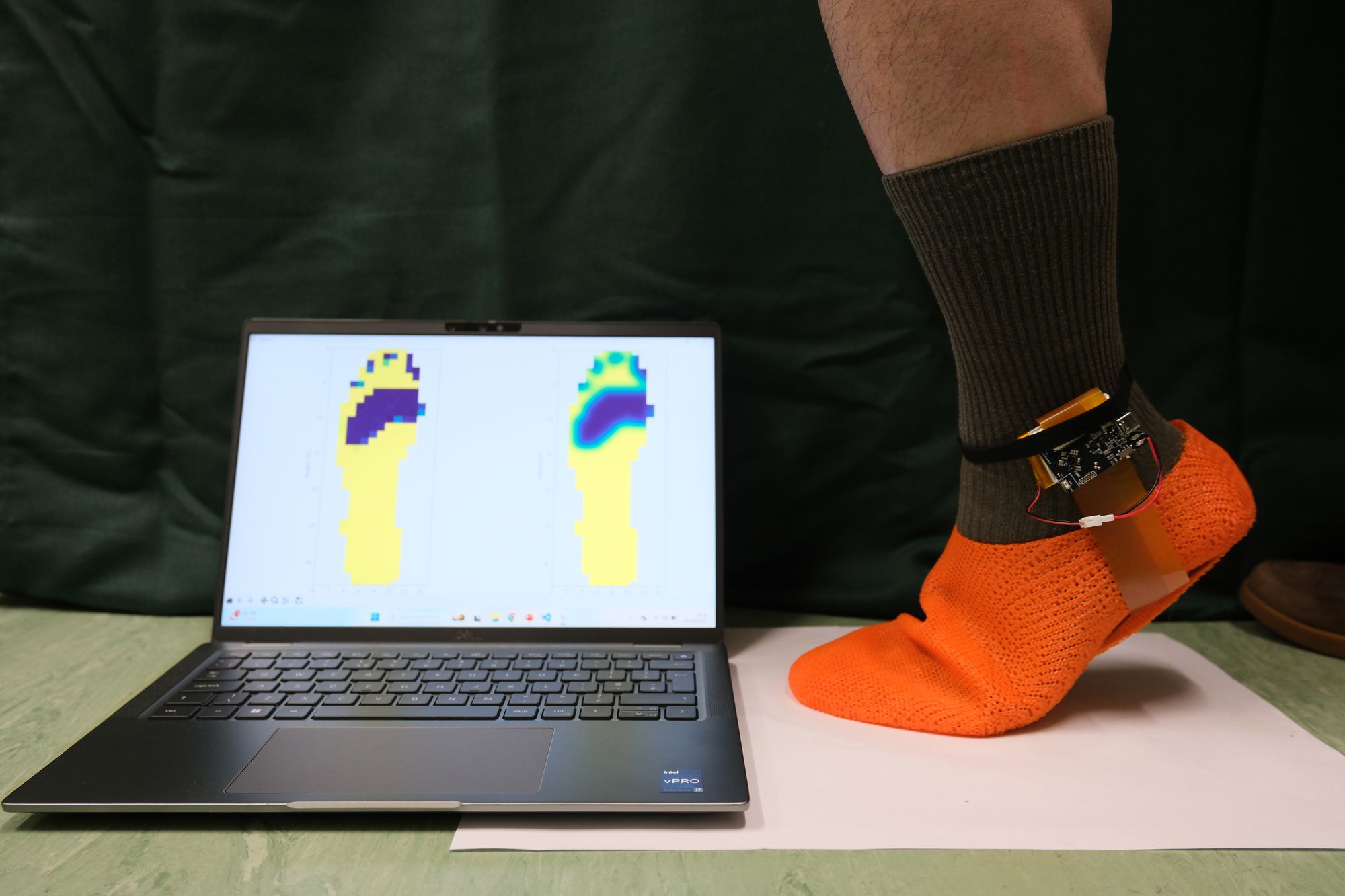

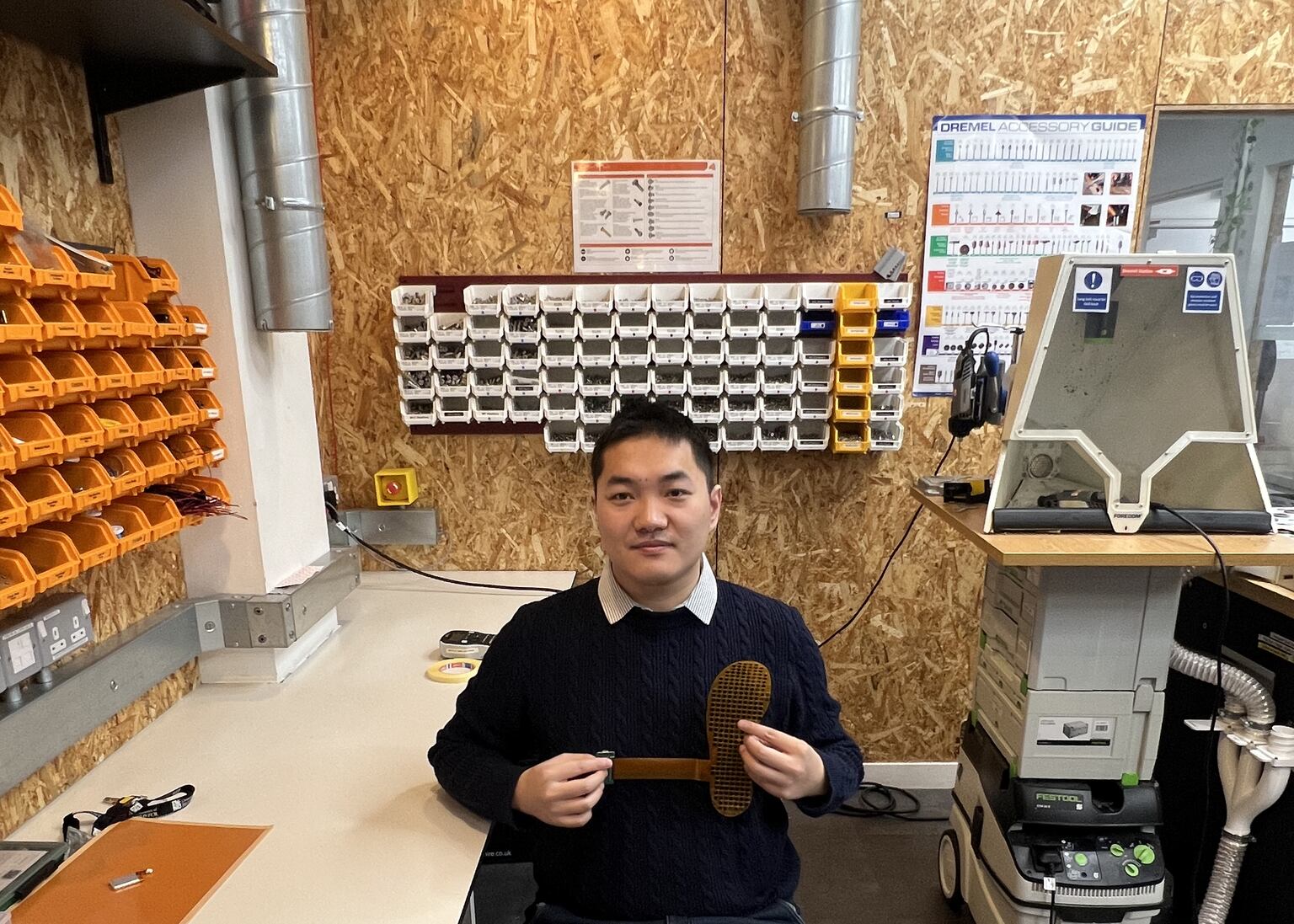

Peter Langlois’s special shoe features an ingenious insole embedded with hundreds of tiny sensors that provide lab-quality, real-time data about his gait, which can be displayed on a tablet or mobile phone.

The concept is so clever that University of Bristol inventor Dr Jiayang Li’s prototype will be demonstrated this week to industry experts.

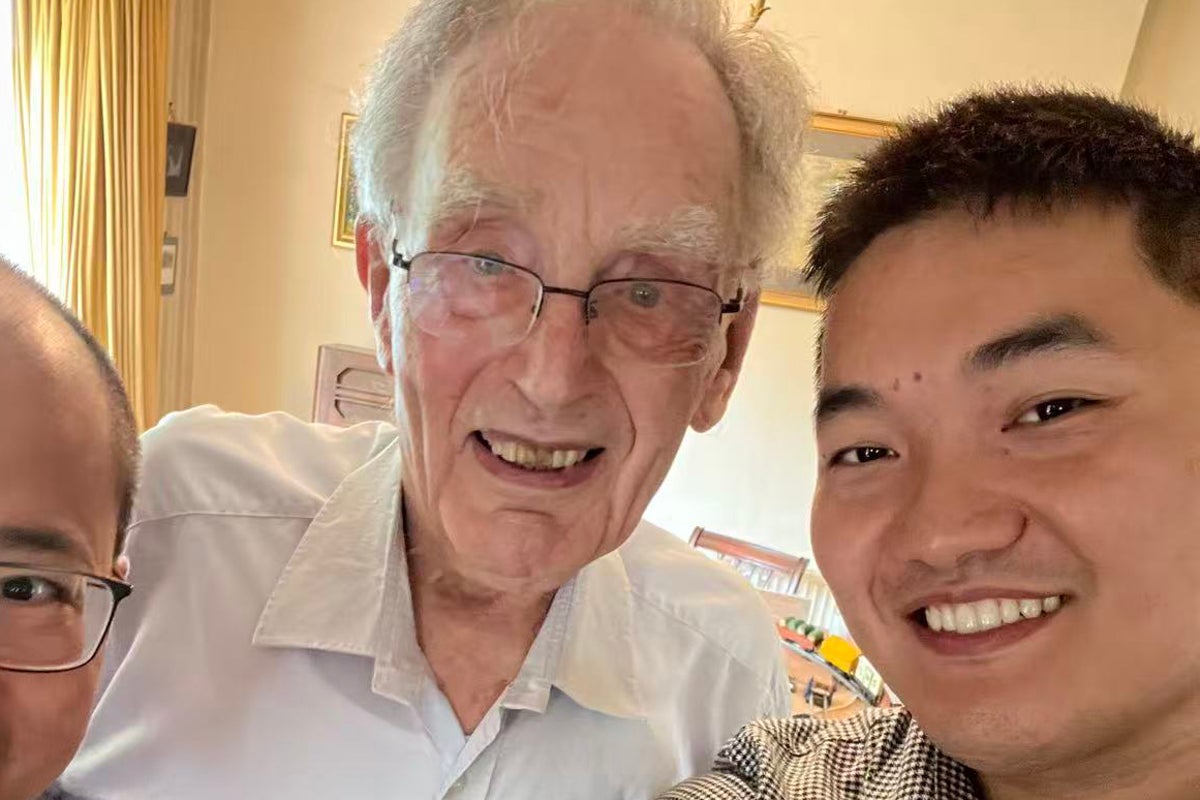

Dr Li, a lecturer in electrical engineering, said: “Peter has been a huge champion of my work since I started my PhD and it’s amazing that he still meticulously edits the research papers of my research group even at the age of 89.

“His mind remains extremely sharp and his dedication is so inspiring.

“One day I noticed he was unsteady on his feet and almost lost his balance.

“It got me thinking this is very risky and could have terrible consequences if it resulted in a fall, especially for people who live alone.

“Then I wondered if the semiconductor technology we’re working on might actually be able to help.”

Dr Li’s previous work developed advanced sensors to more accurately measure people’s lung function and pinpoint how their breathing is restricted.

“I realised we could apply similar techniques to monitor how well people are walking,” he said.

“Mapping their leg gestures in detail could detect risk of falls, helping people like Peter stay safe while also keeping their independence at home.

“Although this highly detailed analysis could be obtained in hospital, the challenge was to make the technology more mobile and accessible in everyday life.

“That’s what makes our shoe so special and such a huge leap forward.”

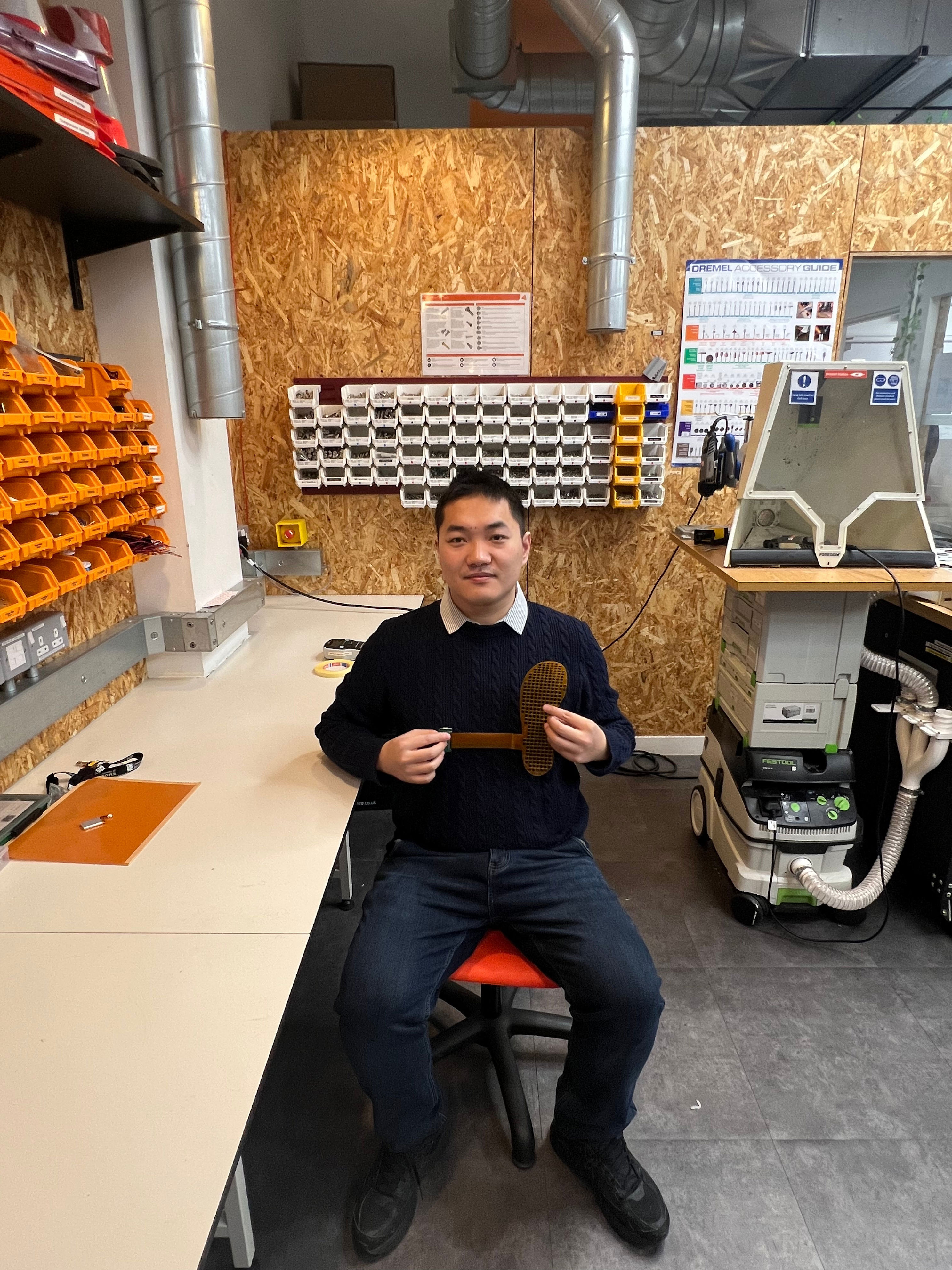

The science involved creating an advanced microchip, a type of semiconductor device, to read all 253 of the tiny sensors on the shoe sole simultaneously.

The data gathered is used to generate images of the person’s foot, highlighting pressure points and assessing whether they are walking in a balanced way or in danger of falling.
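The article does not say how the pressure images are turned into a balance assessment. One quantity commonly used in gait analysis is the centre of pressure, the pressure-weighted average position of all sensor readings. The sketch below shows only that general idea and is not the Bristol team's method; the function name, sensor coordinates and pressure values are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def centre_of_pressure(positions: Sequence[Point],
                       pressures: Sequence[float]) -> Point:
    """Return the pressure-weighted mean position of the sensor readings."""
    if len(positions) != len(pressures):
        raise ValueError("need one pressure reading per sensor position")
    total = sum(pressures)
    if total <= 0:
        raise ValueError("no load detected on the insole")
    x = sum(p * pos[0] for pos, p in zip(positions, pressures)) / total
    y = sum(p * pos[1] for pos, p in zip(positions, pressures)) / total
    return (x, y)


# Toy frame with three hypothetical sensors (the real insole has 253).
positions = [(0.0, 0.0), (2.0, 0.0), (0.0, 4.0)]  # assumed coordinates, in cm
pressures = [1.0, 1.0, 2.0]                       # assumed arbitrary units
print(centre_of_pressure(positions, pressures))   # (0.5, 2.0)
```

Tracking how this point moves from frame to frame is one plausible way to judge whether weight is shifting steadily or drifting towards the edge of the foot.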

To make the device user-friendly, it was designed to run on a low-voltage battery, so in principle it could be powered by small-screen devices such as a mobile phone or even a smartwatch.

“The power of the microchip is just 100 microwatts so the device could run for around three months before it needs recharging,” Dr Li said.
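As a rough back-of-the-envelope check on those figures, the sketch below works out how much energy a 100-microwatt chip would use over three months. It assumes "around three months" means 90 days and a nominal 3 V battery, neither of which is stated in the article, and it counts the microchip alone, not the sensors or any wireless link.

```python
HOURS_PER_DAY = 24


def energy_wh(power_w: float, days: float) -> float:
    """Energy in watt-hours drawn by a constant load over a number of days."""
    return power_w * days * HOURS_PER_DAY


def charge_mah(energy: float, voltage_v: float) -> float:
    """Battery charge in milliamp-hours that holds `energy` Wh at `voltage_v`."""
    return energy / voltage_v * 1000


chip_power_w = 100e-6  # 100 microwatts, as quoted in the article
days = 90              # assumption: "around three months"
voltage_v = 3.0        # assumption: nominal battery voltage

e = energy_wh(chip_power_w, days)
print(f"{e:.3f} Wh")                           # 0.216 Wh
print(f"{charge_mah(e, voltage_v):.0f} mAh")   # 72 mAh
```

Under these assumptions the chip's share of the energy budget is small, which is consistent with the claim that a compact battery could last for months.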

“Fall prevention is a huge challenge for ageing populations, so the potential to anticipate and avoid that happening with our invention is really exciting.

“When I explained the concept to Peter, he was really touched and is pleased it might one day be manufactured and used to help so many people.”

The science behind the device will be showcased at an Institute of Electrical and Electronics Engineers (IEEE) conference on Wednesday.

“The concept could easily be mass produced, creating a low-cost shoe sole which could transform older people’s lives,” Dr Li said.

“Next, we’ll run a formal clinical evaluation with a larger and more diverse group to validate how well it predicts fall risk, refine the analysis provided by the device it’s connected to, and work with clinical and industry partners to translate it into a scalable product.”